Volume 6, Issue 1 (Continuously Updated 2023)

Func Disabil J 2023, 6(1): 0-0

Hosseini Kalej S Z, Amiri Shavaki Y, Abolghasemi J, Sadegh Jenabi M. Direct Observation of Procedural Skills for the Clinical Evaluation of Speech Therapy Students in the Assessment of Speech Organs. Func Disabil J 2023; 6 (1) : 241.1

URL: http://fdj.iums.ac.ir/article-1-203-en.html

Seyede Zahra Hosseini Kalej1, Younes Amiri Shavaki1, Jamileh Abolghasemi2, Mohammad Sadegh Jenabi *3

1- Department of Speech and Language Pathology, Rehabilitation Research Center, School of Rehabilitation Sciences, Iran University of Medical Sciences, Tehran, Iran.

2- Department of Biostatistics, School of Health, Iran University of Medical Sciences, Tehran, Iran.

3- Department of Speech and Language Pathology, Rehabilitation Research Center, School of Rehabilitation Sciences, Iran University of Medical Sciences, Tehran, Iran. Email: ms.jenabi4@gmail.com


1. Introduction

Evaluation is one of the crucial aspects of educational activities, turning education from a static state into a dynamic process. Evaluation results help identify the strengths and weaknesses of a training path, which can guide the correction of its defects and the resulting educational changes. Therefore, one of the critical goals of evaluation is to increase the quality and productivity of education [1, 2]. Evaluating students’ clinical performance provides the information needed to judge their clinical skills, so it is considered one of the complex tasks of professors and clinical instructors in the health professions [3, 4]. Because speech therapy is a practice-based profession, students need knowledge and a range of psychomotor skills to perform well clinically. A specific evaluation and testing program is therefore implemented to judge each student’s competence in practical skills. Improving the educational process at all levels depends on continuous evaluation and on the interventions its results call for, which is why experts emphasize the use of tested, more accurate methods [5]. Many common clinical assessment methods cannot fully assess students in clinical settings. They evaluate only the limited knowledge acquired during a short period of study before the examination, so students cannot identify their shortcomings and work to correct them [6, 7, 8]. Currently, performance-based methods such as the objective structured clinical examination (OSCE), portfolios, the mini-clinical evaluation exercise (Mini-CEX), and direct observation of procedural skills (DOPS) are recommended to evaluate students’ procedural skills [9].
Because speech therapy is a practical profession, evaluation through direct observation of clinical skills in the real clinical environment helps ensure that students can provide appropriate clinical services and handle clinical events under specific patient conditions [10].

Articulation of speech sounds is necessary to produce words and sentences, and it is impossible without the speech organs. Therefore, the assessment of speech organs is a critical part of a complete evaluation. Oral examination and the interpretation of its results require basic science and knowledge of the anatomy and physiology of the oral structures. The goal of this assessment is to identify or rule out structural or functional factors associated with different types of communication or swallowing disorders. In the structural and functional evaluation of the speech organs, the examiner attends to the range of motion, speed, and strength of each organ, such as the lips, teeth, tongue, jaw, and soft palate. The examiner must therefore have comprehensive knowledge and clinical skills related to the structure and function of the speech organs.

This study was conducted to develop a DOPS tool for evaluating students’ skills in the assessment of speech organs and to examine its validity and reliability.

2. Materials and Methods

The research population included speech therapy students of the Faculty of Rehabilitation Sciences, Iran University of Medical Sciences, who were taking clinical internship units. Twenty students were recruited by convenience sampling. This was a cross-sectional, non-interventional, descriptive-analytical study. Data were collected through direct observation using a clinical skills evaluation checklist. The DOPS evaluation form was prepared based on the Robbins-Kelly oral motor control instructions. Its items were then selected and refined based on the available literature and the opinions of speech therapy faculty members, and 18 of the 21 initial items were retained. Content validity was checked using the opinions of ten experts (7 speech therapy faculty members and 3 doctoral students of speech therapy at the Faculty of Rehabilitation Sciences).

For face validity, experts rated the clarity, simplicity, and importance of each item, and an impact score was calculated for each item separately. For content validity, experts rated whether each item was necessary and useful, which was used to calculate the content validity ratio. They also rated the simplicity, clarity, and relevance of each checklist item, which was used to calculate the content validity index. Reliability was determined as the agreement between two expert evaluators who were speech therapy faculty members. The evaluators observed each student’s performance and rated the clinical skill on a scale from zero to ten according to the prepared form: 0, unacceptable; 1-3, lower than expected; 4-6, borderline; 7-9, within expected limits; and 10, above expected. Data from the questionnaires were extracted and analyzed with SPSS software, version 25, using the reliability coefficient and the intraclass correlation coefficient.
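The 0-10 rating bands can be written as a simple lookup, which makes the category boundaries explicit. The following is a minimal Python sketch and is not part of the study’s materials. The function name `dops_category` is hypothetical. Where the boundary between “borderline” and “within expected limits” falls is an assumption here: score 6 is treated as borderline and 7-9 as within expected limits.

```python
def dops_category(score: int) -> str:
    """Map a 0-10 DOPS rating to its descriptive band.

    Hypothetical helper. Treating 6 as "borderline" and 7-9 as
    "within expected limits" is an assumption of this sketch.
    """
    if not isinstance(score, int) or not 0 <= score <= 10:
        raise ValueError("score must be an integer from 0 to 10")
    if score == 0:
        return "unacceptable"
    if score <= 3:
        return "lower than expected"
    if score <= 6:
        return "borderline"
    if score <= 9:
        return "within expected limits"
    return "above expected"
```

For instance, `dops_category(8)` returns `"within expected limits"`.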

A briefing session was held to train the examiners. They also received a written examiner’s guide to the DOPS evaluation that included scoring instructions, a checklist guide, and the necessary criteria. These instructions were intended to increase reliability and standardize the examiners’ judgments. The participants were briefed in one session using a written guide covering the research objectives, the DOPS evaluation method, the types of procedures, the names of the examiners, and the skills evaluation checklist. Whenever students felt that they had acquired the necessary competence in the relevant skill, they asked the examiner to evaluate their performance. Each test took approximately 15 minutes and was followed by about 5 minutes of feedback on the student’s strengths and weaknesses. Finally, student and evaluator satisfaction was measured with the last section of the checklist.

3. Results

Face validity

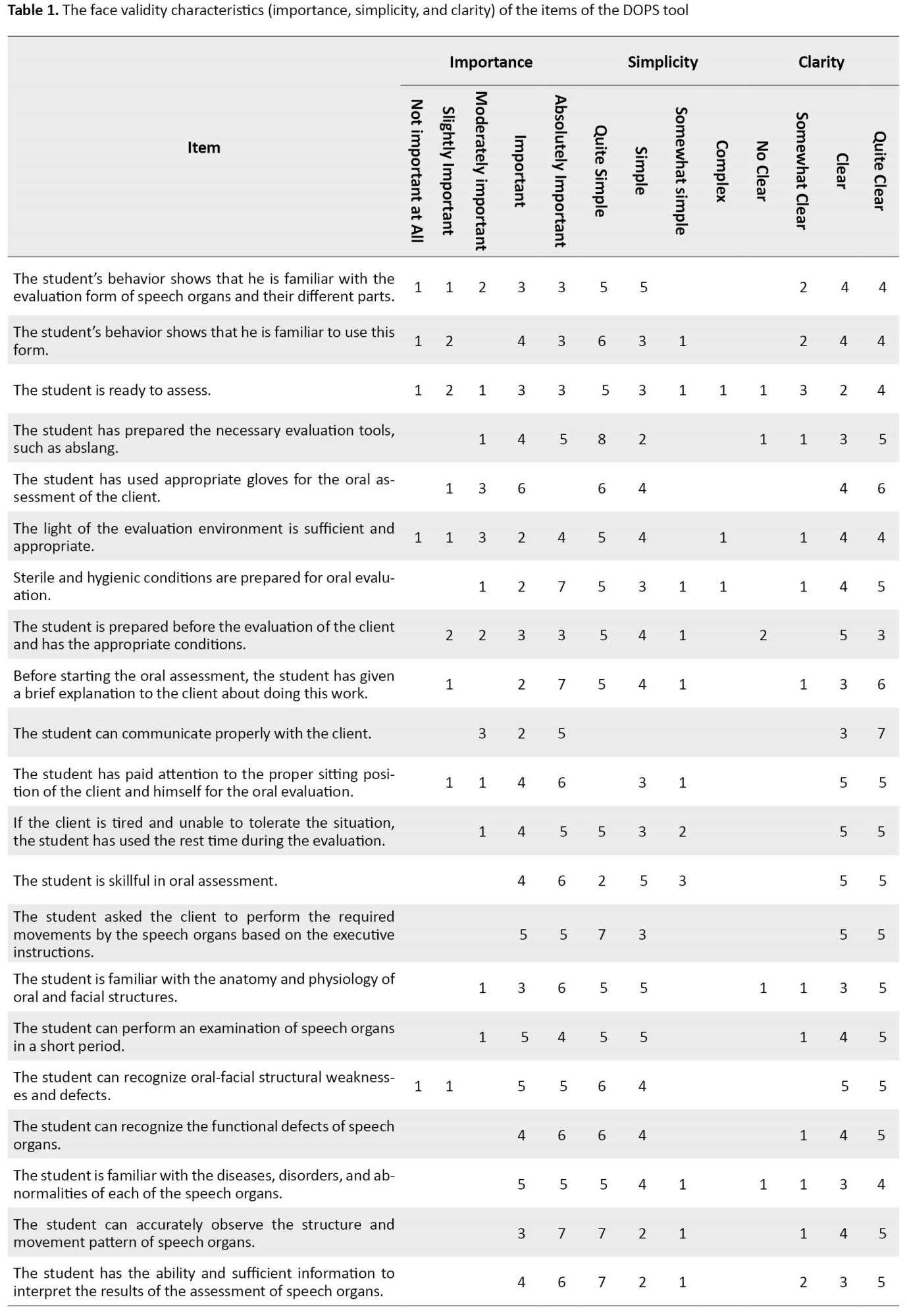

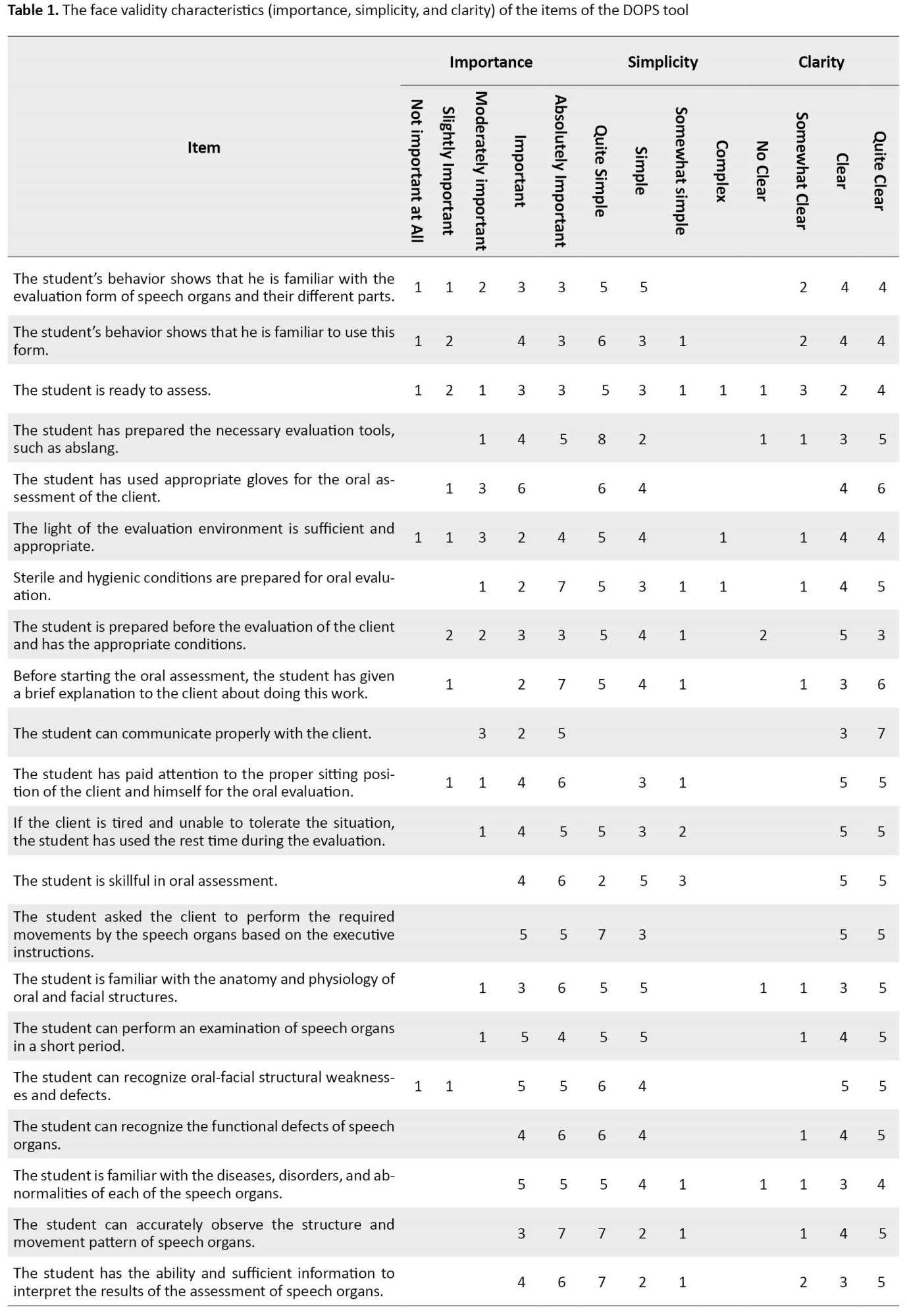

Experts in speech therapy confirmed the face validity of the DOPS test for evaluating procedural skills with a real patient. As shown in Table 1, the simplicity, clarity, and importance of the test items were rated to determine the face validity of each item.

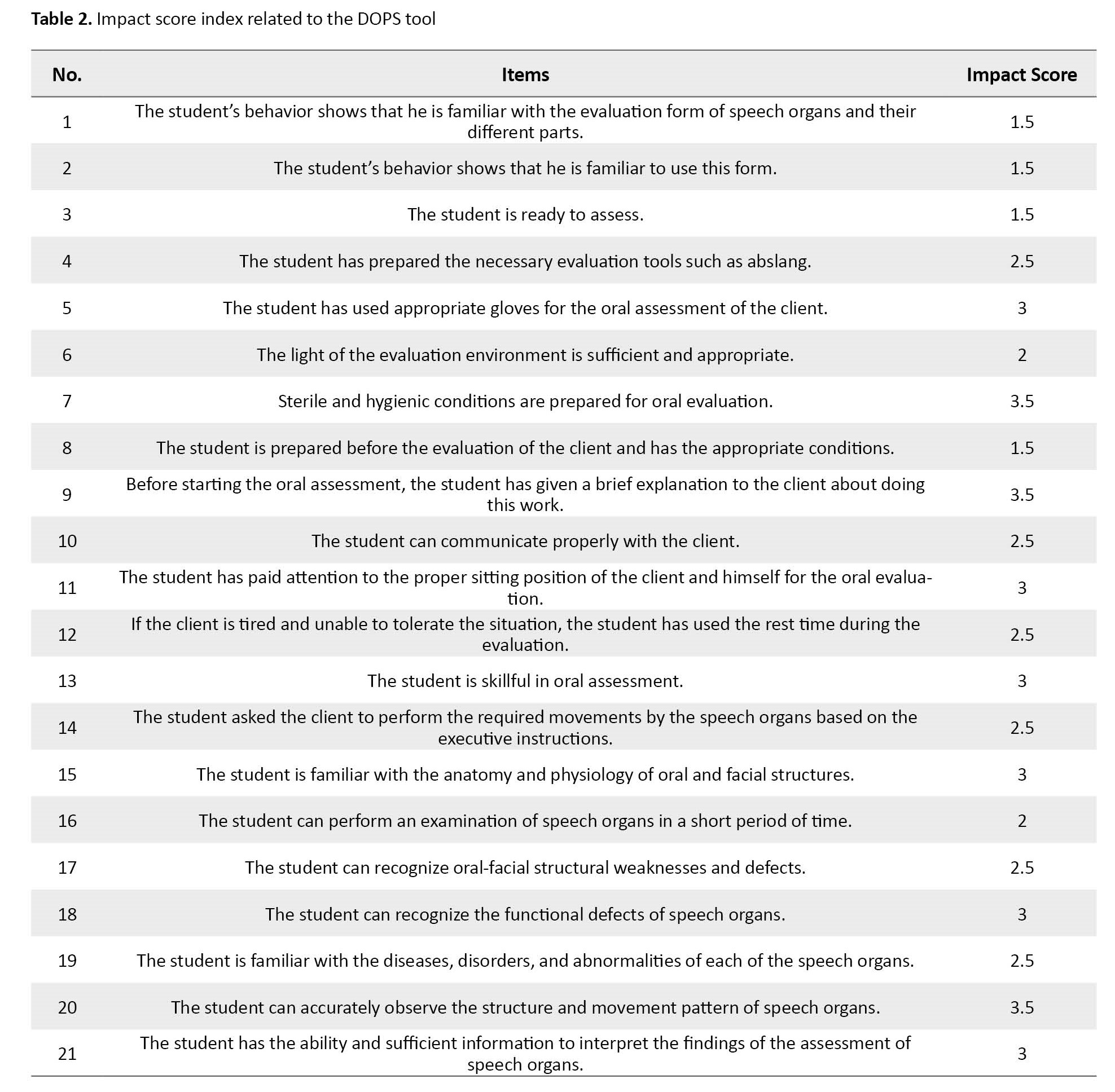

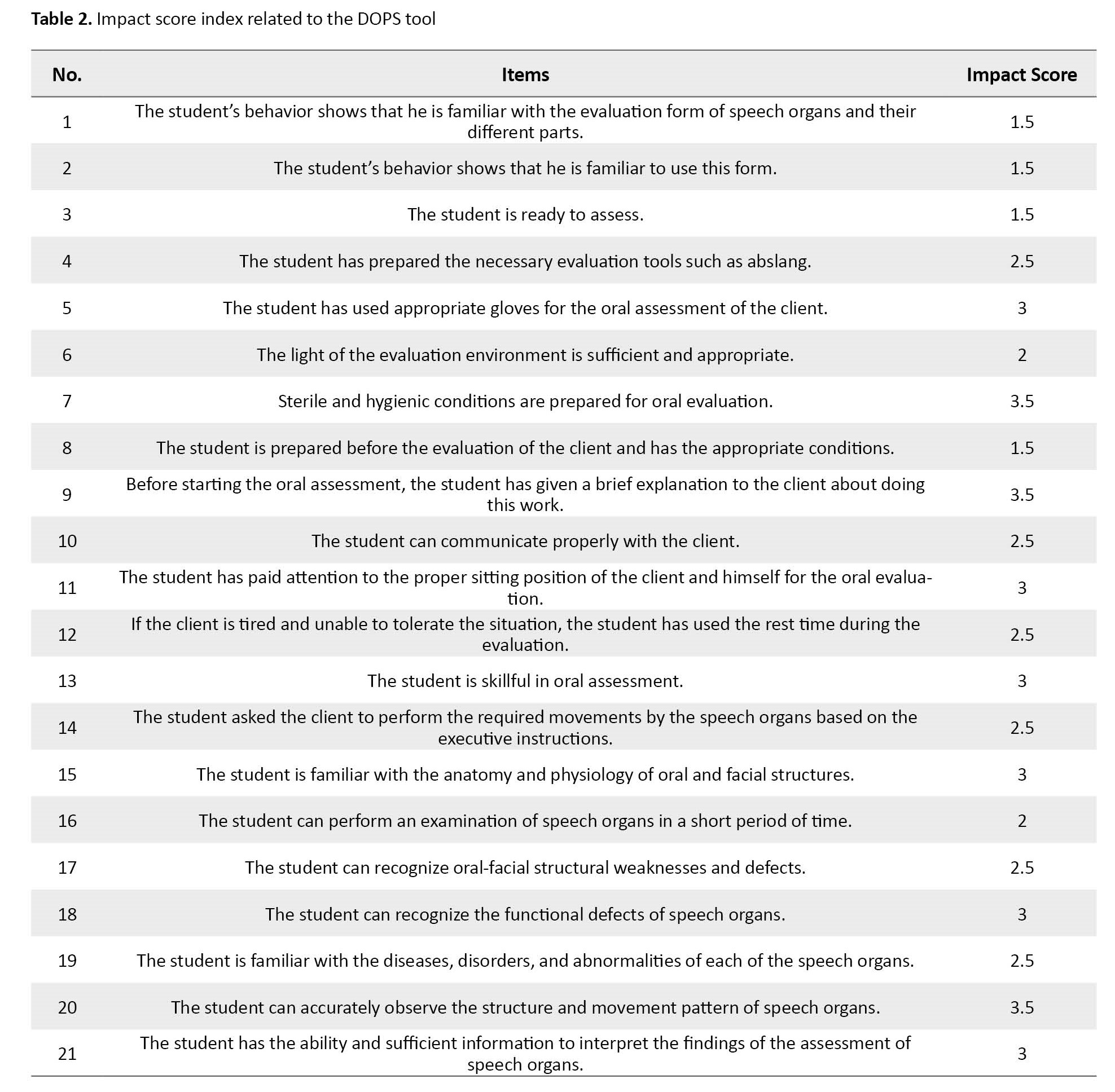

The impact score of each item was then calculated. As shown in Table 2, all items had impact scores >1.5. All items were therefore considered acceptable for face validity and were included in the questionnaire.
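A commonly used definition of the item impact score is frequency × importance. Frequency is the proportion of experts rating an item 4 or 5 on a 5-point importance scale, and importance is the mean rating. The text does not give the rating scale or formula, so both are assumptions in this minimal Python sketch. The function name and the ratings are hypothetical, not study data.

```python
from statistics import mean


def impact_score(ratings: list[int]) -> float:
    """Item impact score = frequency x importance (assumed definition).

    ratings: one importance rating per expert on an assumed 1-5 scale.
    frequency: proportion of experts who rated the item 4 or 5.
    importance: mean rating across all experts.
    """
    if not ratings:
        raise ValueError("need at least one rating")
    frequency = sum(1 for r in ratings if r >= 4) / len(ratings)
    importance = mean(ratings)
    return frequency * importance


# Hypothetical ratings from ten experts for a single item (not study data).
example = [5, 5, 4, 4, 5, 3, 4, 5, 2, 4]
score = impact_score(example)
print(f"impact score = {score:.2f}, retained = {score > 1.5}")
# prints: impact score = 3.28, retained = True
```

Here 8 of 10 experts gave a rating of 4 or 5 (frequency 0.8) and the mean rating is 4.1, so the item clears the 1.5 cutoff comfortably.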

Content validity

As shown in Table 3, three of the 21 test items had a content validity ratio <0.62 and a content validity index <0.80, and these items were removed.

The content validity of the DOPS test was then calculated for the remaining 18 items (85%). Each item’s content validity index (CVI) was over 0.8, and each item’s content validity ratio (CVR) was over 0.62, the minimum value in the Lawshe table for ten experts. The retained items were therefore favorable regarding content validity.
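The item-retention rule can be sketched with two formulas. The first is Lawshe’s CVR = (n_e − N/2)/(N/2), where n_e is the number of experts rating an item “essential” and N is the number of experts. The second is the item-level CVI, computed as the proportion of experts giving a relevance rating of 3 or 4 on a 4-point scale. Three things are assumptions in this minimal Python sketch: the 4-point scale, the “essential” wording, and the requirement that an item pass both cutoffs. The function names and ratings are hypothetical. One consequence is worth spelling out. With ten experts, CVR moves in steps of 0.2, so the 0.62 cutoff means at least 9 of 10 experts must rate an item essential.

```python
def content_validity_ratio(n_essential: int, n_experts: int) -> float:
    """Lawshe's CVR = (n_e - N/2) / (N/2); ranges from -1 to 1."""
    if n_experts <= 0 or not 0 <= n_essential <= n_experts:
        raise ValueError("invalid expert counts")
    half = n_experts / 2
    return (n_essential - half) / half


def item_cvi(relevance_ratings: list[int]) -> float:
    """Item-level CVI: share of experts rating 3 or 4 on an assumed 4-point scale."""
    if not relevance_ratings:
        raise ValueError("need at least one rating")
    return sum(1 for r in relevance_ratings if r >= 3) / len(relevance_ratings)


def keep_item(n_essential: int, relevance_ratings: list[int],
              cvr_min: float = 0.62, cvi_min: float = 0.80) -> bool:
    """Hypothetical rule: retain an item only if it passes both cutoffs."""
    cvr = content_validity_ratio(n_essential, len(relevance_ratings))
    return cvr >= cvr_min and item_cvi(relevance_ratings) >= cvi_min


# With ten experts: 8 "essential" votes give 0.6, 9 give 0.8, 10 give 1.0.
for n_e in (8, 9, 10):
    print(n_e, round(content_validity_ratio(n_e, 10), 2))

# Hypothetical relevance ratings from ten experts (not study data).
ratings = [4, 4, 3, 4, 3, 4, 4, 2, 4, 3]
print(keep_item(9, ratings), keep_item(8, ratings))  # True False
```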

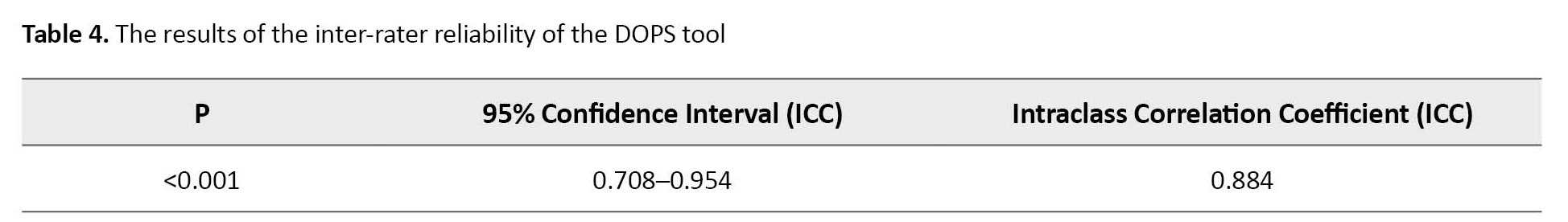

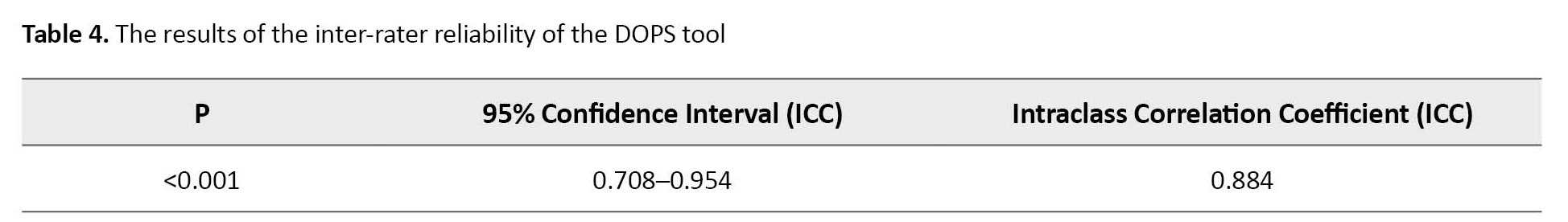

Reliability

To determine reliability (agreement between the two evaluators, who rated simultaneously), the reliability coefficient and the intraclass correlation coefficient (ICC) were used. The ICC was calculated from the two evaluators’ ratings of 20 students. Its value was 0.884 (95% confidence interval, 0.708-0.954; P<0.001), indicating good inter-rater agreement and, therefore, good reliability (Table 4).
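The ICC can be computed from a subjects × raters table (in this design, 20 students × 2 evaluators) using two-way ANOVA mean squares. The text does not state which ICC model was selected in SPSS. This minimal Python sketch therefore assumes the two-way random-effects, absolute-agreement, single-rater form, ICC(2,1) in Shrout and Fleiss’s notation. The function name is hypothetical, and the confidence interval is not computed. The demonstration uses the well-known 6-subject × 4-judge textbook example rather than study data.

```python
def icc_2_1(ratings: list[list[float]]) -> float:
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    ratings[i][j] is the score that rater j gave to subject i.
    """
    n = len(ratings)
    k = len(ratings[0]) if n else 0
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a complete subjects x raters table, at least 2 x 2")
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between subjects
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between raters
    ss_error = ss_total - ss_rows - ss_cols                 # residual
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Textbook example: 6 subjects rated by 4 judges (not study data).
shrout_fleiss = [
    [9, 2, 5, 8],
    [6, 1, 3, 2],
    [8, 4, 6, 8],
    [7, 1, 2, 6],
    [10, 5, 6, 9],
    [6, 2, 4, 7],
]
print(f"{icc_2_1(shrout_fleiss):.2f}")  # prints 0.29
```

Other ICC forms use different denominators and can give different values for the same data. Examples include consistency rather than absolute agreement, or average rather than single measures. The reported 0.884 should be interpreted with the model actually chosen in SPSS in mind.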

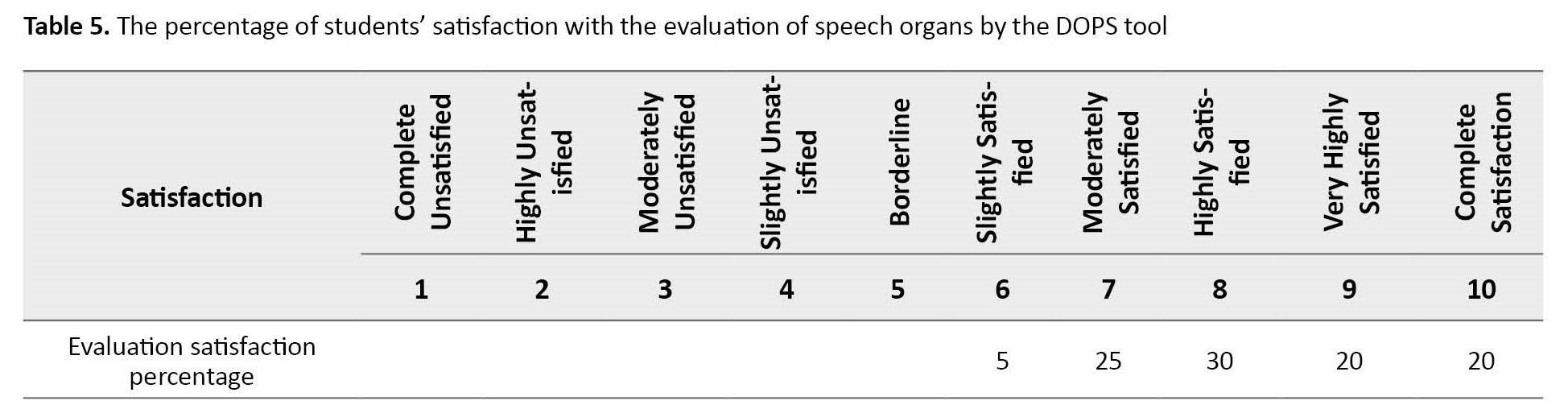

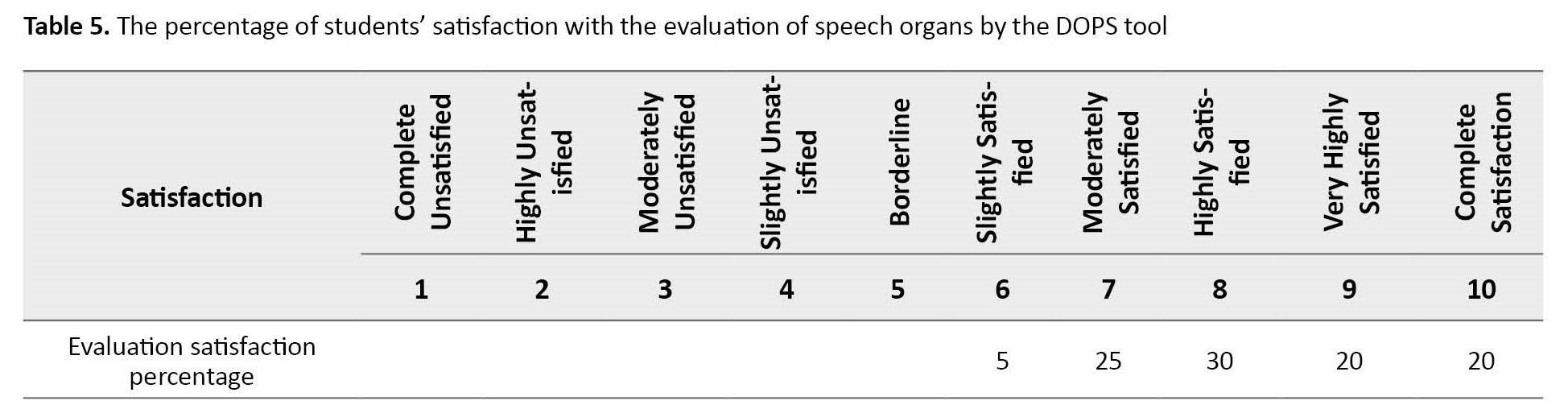

Finally, the DOPS checklist was used to examine the percentage of students and evaluators who were satisfied with the evaluation of the clinical skill of assessing the speech organs. As shown in Table 5, all students chose options from “slightly satisfied” to “completely satisfied”, and most (70%) chose “high satisfaction” or “complete satisfaction”.

As shown in Table 6, none of the evaluators chose options from “no satisfaction” to “slightly satisfied”, and most of their responses fell between “high satisfaction” and “full satisfaction”.

Thus, both the students and the evaluators were satisfied with using the DOPS tool to evaluate the clinical skill of assessing the speech organs in speech therapy.

4. Discussion

The present study’s results confirm the validity and reliability of the DOPS test administered to speech therapy students. Face validity was established through the opinions of speech therapy experts, who confirmed the evaluation of clinical skills through DOPS on a real patient. This finding is consistent with most studies in this field. Rozbahani et al. investigated the validity and reliability of the DOPS test in evaluating the clinical skills of audiology students at Iran University of Medical Sciences, and the opinions of audiologists confirmed its face validity for evaluating students’ procedural skills with a real patient [11]. In a study by Wilkinson et al. at the Royal College of Medicine in England on the validity of the DOPS test in educational programs, experts concluded that DOPS has high face validity [12]. Regarding content validity in the present research, three of the 21 items had a CVR <0.62 and a CVI <0.8 and were therefore removed. The remaining items (85%) had a CVI of 0.8 or more and were favorable regarding content validity. This result is consistent with the study by Sarviyeh et al. at the Faculty of Nursing and Midwifery of Kashan University of Medical Sciences, which reported a CVI >0.75 and a CVR >0.50 for the DOPS test [22]. It is also consistent with a study by Jalili et al. in Iran that evaluated nursing students’ clinical skills using the DOPS method. That study showed that the DOPS test is a suitable method for evaluating psychomotor skills and that, owing to its high validity, reliability, and acceptance, it is suitable for evaluating all aspects of students’ performance [13].
The present content validity results are also consistent with a study by John-Roger Barton et al. in England, which used the DOPS test to assess skills in colon cancer screening and reported validity and reliability of 0.81 [14].

In this study, all students chose options from “slightly satisfied” to “completely satisfied”, and most (70%) chose “high satisfaction” or “complete satisfaction”. None of the evaluators chose options from “no satisfaction” to “slightly satisfied”, and their choices fell between “high satisfaction” and “complete satisfaction”. These results are consistent with the study by Sahebalzamani et al. on the acceptability of DOPS in nursing at Zahedan University, in which 75% of faculty members and 70% of students were satisfied with the test. DOPS thus appears to evaluate clinical skills effectively and to be accepted by faculty members and students [15]. The satisfaction results are also consistent with Farajpour et al.’s study, “satisfaction of medical interns and professors with the implementation of the DOPS test at the Islamic Azad University of Mashhad in 2013”. That study found that feasibility, educational effects, and satisfaction were significantly high from the students’ point of view and that examiners’ satisfaction scores were also significantly high [16]. In this study, two speech therapists rated each student to assess inter-rater reliability. The ICC calculated from their ratings was 0.884 (95% confidence interval, 0.708-0.954; P<0.001), indicating that the test is reliable and that the raters agreed well. This result is consistent with the study by Sahebalzamani et al. entitled “validity and reliability of the test of direct observation of procedural skills in the evaluation of clinical skills of nursing students of Zahedan College of Nursing and Midwifery”.
In that study, the lowest and highest inter-rater correlation coefficients were 0.42 and 0.84, respectively, and all were significant [15]. Similarly, a systematic review by Habibi et al. concluded that the DOPS test has very good validity and reliability [4]. Based on the results of this research, the DOPS test has appropriate validity and reliability for the objective measurement of clinical skills in speech therapy and is applicable from the point of view of students and professors. Because this method combines direct observation with feedback, it can improve the quality of treatment services provided in speech therapy. Such an evaluation tool leads professors to pay more attention to how students carry out the intended clinical procedure. Students, in turn, receive appropriate feedback to correct the shortcomings of their clinical work, which leads to a more accurate assessment of the patient and, on that basis, better services. Correct and accurate implementation of this method links theoretical knowledge to student performance. The lack of an objective tool reduces the possibility of valid and reliable evaluation in clinical examinations, especially during the training of speech therapy students. Because the test method and content are directly related to clinical practice, the test positively affects student learning. Therefore, professors are encouraged to use this method to evaluate students’ performance at the bedside, because its feedback is based on real, observed behaviors rather than general comments.

5. Conclusion

The research results showed that the DOPS test has appropriate validity and reliability for the objective measurement of clinical skills in the evaluation of speech organs in speech therapy. Students and professors found this tool suitable. Because it combines direct observation with feedback, it can improve the quality of education and of the clinical services provided by speech therapy students. Such an evaluation tool leads professors to pay more attention to how students carry out the intended clinical procedure, and students receive appropriate feedback to correct the shortcomings of their clinical work. This leads to a more accurate assessment and better services.

Ethical Considerations

Compliance with ethical guidelines

To comply with the ethical principles, the examiner introduced himself to each participant and presented the official letter. Then, the researcher gave the necessary explanations about the test and how to complete it (Code: IR.IUMS.REC.1400.260).

Funding

This article was extracted from the master’s thesis of Seyede Zahra Hosseini Kalej, approved by the Department of Speech and Language Pathology, School of Rehabilitation Sciences, Iran University of Medical Sciences, Tehran, Iran.

Authors' contributions

Conceptualization and study design: Seyede Zahra Hosseini Kalej, Younes Amiri Shavaki, and Mohammad Sadegh Jenabi; Data collection and drafting the manuscript: Seyede Zahra Hosseini Kalej; Data analysis: Jamileh Abolghasemi; Critical revision of the article and final approval: Younes Amiri Shavaki and Mohammad Sadegh Jenabi; Data interpretation: Seyede Zahra Hosseini Kalej, Younes Amiri Shavaki, Jamileh Abolghasemi, and Mohammad Sadegh Jenabi; Revision of the article: Younes Amiri Shavaki.

Conflict of interest

The authors declared no conflict of interest.

Acknowledgments

The authors thank the Department of Speech and Language Pathology and the participants and evaluators.

References

Evaluation is one of the crucial aspects of educational activities that transform education from a static state to a dynamic process. The results of the evaluation help to identify the positive aspects and weaknesses of the training path, which can be useful in changing and fixing the defects and thus making educational changes and corrections. Therefore, one of the critical goals of evaluation is to increase the quality and productivity of education [1, 2]. Evaluating students’ clinical performance provides information to judge students’ skills related to clinical work. Therefore, evaluating students’ clinical performance is considered one of the complex tasks of professors and clinical instructors for health professions [3, 4]. Since professional speech therapy is practical, students need knowledge, information, and various psychomotor skills to have a proper clinical performance. Therefore, a specific evaluation and test program is implemented to judge the student’s competence in the practical skill. Improving the educational process at all levels is related to continuous evaluations and the necessary interventions based on their results. It is due to such effects that the use of tested and more accurate methods is emphasized by experts [5]. Many common clinical assessment methods cannot fully assess students in clinical settings and only evaluate the small amount of information obtained after a short-term pre-examination study. Therefore, the student cannot identify the defects and try to correct them [6, 7, 8]. Currently, methods, such as objective structured clinical examination (OSCE), portfolio, mini-clinical evaluation exercise (Mini-CEX), and direct observation of procedural skills (DOPS), which are performance-based, are recommended to evaluate students’ procedural skills [9]. 
Considering that speech therapy is a practical profession, evaluation by direct observation of clinical skills in the real clinical environment ensures the ability of students to provide appropriate clinical services and face clinical events in special patient conditions [10].

Articulation of speech sounds is necessary to express words and sentences. Without speech organs, articulation of speech sounds is impossible. Therefore, the assessment of speech organs is a critical part of a complete evaluation. Oral examination and interpretation of results require basic science and knowledge of the anatomy and physiology of the oral structure. The goal of this assessment is to identify or rule out structural or functional factors associated with different types of communication or swallowing disorders. In the evaluation of speech organs of the building, the range of motion and speed and strength of each organ, such as lips, teeth, tongue, jaw, and soft palate, are of interest to the examiner. The examiner must have comprehensive knowledge and clinical skills related to the structure and function of speech organs.

This study was conducted to prepare a DOPS tool to evaluate students’ skills in the assessment of speech organs.

2. Materials and Methods

The research population included the speech therapy students of the Faculty of Rehabilitation Sciences, Iran University of Medical Sciences, who were undergoing clinical internship units. The data were collected via the convinience sampling method. The participants included 20 students. This study was cross-sectional with a non-interventional descriptive-analytical method. To collect data, the checklist of clinical skills evaluation form was used through direct observation. To prepare the DOPS evaluation form, the Robbins-Kelly oral motor control instructions were used. Then, the desired DOPS form items were determined and scrutinized according to available literature and also using the opinions of the faculty members of speech therapy specialists. The number of selected items was 18 from 21 predetermined items. To check the content validity, the opinions of ten experts (7 speech therapy faculty members and 3 doctoral students of speech therapy of the Faculty of Rehabilitation Sciences) were used.

Each item’s clarity, simplicity, and importance were determined for face validity. The impact score index was used, which was calculated for each item separately. To check the ratio of content validity to necessity and usefulness and to check the content validity index, the simplicity, clarity, and relevance of the checklist questions were investigated. To determine the reliability, the agreement between two expert evaluators and speech therapy faculty members was used. Evaluators observed any student’s performance and judged their clinical skill by determining a score between zero and ten according to the prepared form. Score 0 was equal to unacceptable, scores 1-3 mean lower than expected, scores 4-6 mean borderline, scores 6-9 mean within expected limits, and score 10 was above expected. The data obtained from the questionnaires was extracted and statistically analyzed by SPSS software, version 25. The reliability coefficient and the internal correlation coefficient were used.

A briefing session was held to train the examiners, and the examiner’s guide in the DOPS evaluation was in written form. Scoring instructions, a checklist guide, and the necessary criteria were provided to examiners. This instruction was provided for more reliability and homogenization of the examiners’ judgment. The participants were trained in the form of a written guide, including the research objectives, the DOPS evaluation method, the type of procedures, the names of the examiners, and the skills evaluation checklist in one session. Whenever the students felt that they had acquired the necessary competence in the relevant skill, the examiner was asked to evaluate their performance. Each test took approximately 15 minutes, and after completion, about 5 minutes were spent providing feedback to the students to discuss their strengths and weaknesses. Finally, their level of satisfaction was examined by the final part of the checklist that measures the satisfaction of students and evaluators.

3. Results

Face validity

The face validity of the DOPS test was confirmed in evaluating the procedural skills of a real patient according to the opinions of experts in the field of speech therapy. According to Table 1, the degree of simplicity, clarity, and importance of the test questions was examined to determine the face validity of the items.

Then, their impact score was calculated. As seen in Table 2, the impact scores of all the items were >1.5; therefore, they are favorable regarding face validity and were included in the questionnaire.

Content validity

In this study, according to Table 3, among the 21 test questions, for three questions, the value of the content validity ratio was <0.62, the content validity index was <0.80, and these questions were removed.

In the rest of the items (85%), the content validity of the DOPS test was calculated. Each item’s content validity index (CVI) was over 0.8, and the content validity ratio (CVR) for each item was over 0.62. According to the Lawshe table, calculated CVI and CVR were favorable regarding content validity.

Reliability

To determine the reliability (agreement between evaluators) that two evaluators were used simultaneously, the reliability coefficient and internal correlation coefficient were used. The intraclass correlation coefficient (ICC) was calculated using the opinions of two evaluators for 20 students, and the ICC value was 884/ 0 with a 95% confidence interval (0.708-0.954), (P<0.001), which indicates a good agreement between the raters and a reason for good reliability (Table 4).

Finally, the DOPS tool examined the percentage of satisfaction of students and evaluators in evaluating the clinical skill of assessing the speech organs. According to Table 5, all students chose the option of slightly satisfied to completely satisfied, and the largest percentage (70%) for the options was “high satisfaction” to “complete satisfaction”.

According to Table 6, none of the evaluators chose the options of no satisfaction to “slightly satisfied”, and the majority of satisfaction was between the options of “high satisfaction” and “full satisfaction”.

As a result, the students were satisfied with the evaluation of the clinical skill of evaluating the speech organs in the field of speech therapy with the DOPS tool, and also the evaluators were satisfied with the evaluation of the clinical skill of the students of the speech therapy field with the DOPS tool.

4. Discussion

The present study›s results confirm the validity and reliability of the DOPS test performed on speech therapy students. In this test, experts in speech therapy have been used for face validity. They have confirmed the evaluation of clinical skills through DOPS on a real patient, which is consistent with most studies conducted in this field. Rozbahani et al. investigated the validity and reliability of the DOPS test in evaluating the clinical skills of audiology students at the Iran University of Medical Sciences. The face validity of the DOPS test in evaluating students’ procedural skills while working with a real patient was confirmed by extracting the opinions of audiologists [11]. In a study conducted by Wilkinson et al. at the Royal College of Medicine in England regarding the validity of the DOPS test in educational programs, the experts concluded that the DOPS has high face validity [12]. In this research, on the topic of content validity, among 21 questions, for three questions, the value of CVR was <0.62, and CVI was <0.8, therefore these questions were removed. The CVI value of questions (85%) is 0.8 or more, and they are favorable regarding content validity. This result is based on the study conducted by Sarviyeh et al. at the Faculty of Nursing and Midwifery of Kashan University of Medical Sciences. In its results, the content validity of the DOPS test using the content validity index is >0.75, and the content validity ratio is >0.50, which is reported as consistent [22]. It is also consistent with the results of a study conducted by Jalili et al. in Iran to evaluate nursing students’ clinical skills using the DOPS method. This study showed that the DOPS test is a suitable method to evaluate psychological skills. Due to its high validity, reliability, and acceptance, it is suitable to evaluate all aspects of students’ performance [13]. 
Also, the results of this research regarding content validity are consistent with a study conducted by John-Roger Barton et al. in England to screen colon cancer with the DOPS test. Its validity and reliability was 0.81 [14].

In this study, all the students have chosen options from slightly satisfied to completely satisfied, and the largest number (70%) have chosen options from high satisfaction and complete satisfaction. Also, none of the evaluators chose the options of no satisfaction to slightly satisfied, and their choice was between the options of high satisfaction and complete satisfaction. These results are consistent with the study conducted by Sahebalzamani et al. in the field of nursing at Zahedan University to study and research the acceptability of DOPS, which showed that 75% of faculty members and 70% of students were satisfied with the test. It seems that DOPS effectively evaluates clinical skills and is also accepted among faculty members and students [15]. Also, the results of this research regarding satisfaction with Farajpour et al.’s study titled “satisfaction of medical interns and professors with the implementation of the DOPS test at the Islamic Azad University of Mashhad in 2013” showed that the feasibility, educational effects, and satisfaction were significantly high from the student’s point of view. Satisfaction from the point of view of examiners also had a significantly high score, which is consistent [16]. In this study, two speech therapists were used for inter-rater reliability. The ICC was calculated using the opinions of two evaluators. The ICC value was 0.884 with a 95% confidence interval (0.708-0.954) (P<0.001). These results indicate that the test is reliable and an appropriate agreement exists between the raters. This result is consistent with the study conducted by Sahib Sahebalzamani et al. entitled “validity and reliability of the test of direct observation of procedural skills in the evaluation of clinical skills of nursing students of Zahedan College of Nursing and Midwifery”. 
In this study, the lowest and highest value of the correlation coefficient in reliability between evaluators was 0.42 and 0.84, respectively, which were significant in all cases [15]. Also, according to the systematic studies conducted by Habibi et al., it was concluded that the reliability of the DOPS test has a very good validity [4]. According to the results of this research, it can be concluded that the DOPS test for the objective measurement of clinical skills in speech therapy has appropriate validity and reliability and is applicable from the point of view of students and professors. This method uses direct observation and provides feedback, improving the quality of treatment services provided in speech therapy. The existence of such an evaluation tool leads professors to pay more attention to the implementation of the desired clinical procedure by students. The student also receives appropriate feedback to correct the shortcomings of his clinical work, which leads to a more accurate assessment of the patient. Based on the assessment, better services can be provided to the patient. Correct and accurate implementation of this method leads to a proper connection between science and student performance. The lack of an objective tool reduces the possibility of valid and reliable evaluation in clinical examinations, especially during the study period of speech therapy students. Considering that this test method and content are directly related to clinical practice, it positively affects student learning. Therefore, it is recommended that professors use this method to evaluate students’ performance at the bedside because instead of general comments, feedback is based on real and objective behaviors.

5. Conclusion

The results showed that the DOPS test has appropriate validity and reliability for the objective measurement of clinical skills in the assessment of speech organs in speech therapy. Students and professors considered the tool suitable, and because it relies on direct observation and feedback, it can improve the quality of education and of the treatment services provided by speech therapy students. Such an evaluation tool leads professors to pay closer attention to how students carry out the required clinical procedure, and students receive appropriate feedback to correct the shortcomings of their clinical work, which leads to more accurate assessment and better services.

Ethical Considerations

Compliance with ethical guidelines

To comply with ethical principles, the examiner introduced himself to each participant and presented the official letter. The researcher then explained the test and how to complete it (ethics code: IR.IUMS.REC.1400.260).

Funding

This article was extracted from the master’s thesis of Seyede Zahra Hosseini Kalej, approved by the Department of Speech and Language Pathology, School of Rehabilitation Sciences, Iran University of Medical Sciences, Tehran, Iran.

Authors' contributions

Conceptualization and study design: Seyede Zahra Hosseini Kalej, Younes Amiri Shavaki, and Mohammad Sadegh Jenabi; Data collection and drafting the manuscript: Seyede Zahra Hosseini Kalej; Data analysis: Jamileh Abolghasemi; Critical revision of the article and final approval: Younes Amiri Shavaki and Mohammad Sadegh Jenabi; Data interpretation: Seyede Zahra Hosseini Kalej, Younes Amiri Shavaki, Jamileh Abolghasemi, and Mohammad Sadegh Jenabi; Revision of the article: Younes Amiri Shavaki.

Conflict of interest

The authors declared no conflict of interest.

Acknowledgments

The authors thank the Department of Speech and Language Pathology and the participants and evaluators.

References

- Chehrzad M, Shafiei Pour S, Mirzaei M, Kazemnejad E. [Comparison between two methods: Objective structured clinical evaluation (OSCE) and traditional on nursing students’ satisfaction (Persian)]. J Guilan Univ Med Sci. 2004; 13(50):8-13.

- Sarviyeh M, Akbari H, Mirhosseini F, Baradaran H, Hemati A, Kohpayezadeh J. [Validity and reliability of direct observation of procedural skills in evaluating clinical skills of midwifery students of Kashan nursing and midwifery school (Persian)]. J Sabzevar Univ Med Sci. 2014; 21(1):145-54.

- Farajpour A, Amini M, Pishbin E, Mostafavian Z, Akbari Farmad S. Using modified direct observation of procedural skills (DOPS) to assess undergraduate medical students. J Adv Med Educ Prof. 2018; 6(3):130-6.

- Habibi H, Khaghanizade M, Mahmoodi H, Ebadi A, Seyedmazhari M. [Comparison of the effects of modern assessment methods (DOPS and mini-CEX) with traditional method on nursing students’ clinical skills: A randomized trial (Persian)]. Iran J Med Educ. 2013; 13(5):364-72.

- Delaram M, Tootoonchi M. [Comparing self- and teacher-assessment in obstetric clerkship course for midwifery students of Shahrekord University of Medical Sciences (Persian)]. Iran J Med Educ. 2009; 9(3):231.

- Schoonheim-Klein M, Walmsley AD, Habets L, van der Velden U, Manogue M. An implementation strategy for introducing an OSCE into a dental school. Eur J Dent Educ. 2005; 9(4):143-9. [DOI:10.1111/j.1600-0579.2005.00379.x]

- Noohi E, Motesadi M, Haghdoost A. [Clinical teachers’ viewpoints towards objective structured clinical examination in Kerman University of Medical Science (Persian)]. Iran J Med Educ. 2008; 8(1):113-20.

- Rushforth HE. Objective structured clinical examination (OSCE): Review of literature and implications for nursing education. Nurse Educ Today. 2007; 27(5):481-90. [DOI:10.1016/j.nedt.2006.08.009]

- Kariman N, Heidari T. [The effect of Portfolio’s evaluation on learning and satisfaction of midwifery students (Persian)]. J Arak Univ Med Sci. 2010; 12(4):81-8.

- Lörwald AC, Lahner FM, Greif R, Berendonk C, Norcini J, Huwendiek S. Factors influencing the educational impact of Mini-CEX and DOPS: A qualitative synthesis. Med Teach. 2018; 40(4):414-20. [DOI:10.1080/0142159X.2017.1408901]

- Alborzi R, Koohpayehzadeh J, Rouzbahani M. [Validity and reliability of the Persian version of direct observation of procedural skills tool in audiology (Persian)]. Rehabil Med. 2021; 10(2):346-57. [DOI:10.22037/JRM.2020.113471.2372]

- Wilkinson JR, Crossley JG, Wragg A, Mills P, Cowan G, Wade W. Implementing workplace-based assessment across the medical specialties in the United Kingdom. Med Educ. 2008; 42(4):364-73. [DOI:10.1111/j.1365-2923.2008.03010.x]

- Jalili M, Imanipour M, Nayeri ND, Mirzazadeh A. Evaluation of the nursing students’ skills by DOPS. J Med Educ. 2015; 14(1):e105420. [DOI:10.22037/jme.v14i1.9069]

- Barton JR, Corbett S, van der Vleuten CP; English Bowel Cancer Screening Programme; UK Joint Advisory Group for Gastrointestinal Endoscopy. The validity and reliability of a Direct Observation of Procedural Skills assessment tool: Assessing colonoscopic skills of senior endoscopists. Gastrointest Endosc. 2012; 75(3):591-7. [DOI:10.1016/j.gie.2011.09.053]

- Sahebalzamani M, Farahani H. Validity and reliability of direct observation of procedural skills in evaluating the clinical skills of nursing students of Zahedan nursing and midwifery school. Zahedan J Res Med Sci. 2012; 14(2):e93588.

- Farajpour A, Amini M, Pishbin E, Arshadi H, Sanjarmusavi N, Yousefi J, et al. [Teachers’ and students’ satisfaction with DOPS examination in Islamic Azad University of Mashhad, a study in year 2012 (Persian)]. Iran J Med Educ. 2014; 14(2):165-73.

Type of Study: Research

Subject: Speech Therapy

Received: 2023/02/5 | Accepted: 2024/01/3 | Published: 2023/02/15
